Known issues in Cloudera Data Warehouse on cloud

Review the known issues in this release of the Cloudera Data Warehouse service on Cloudera on cloud.

- Known issues identified in the December 5, 2024 release

- Known issues identified before the December 5, 2024 release

- Technical Service Bulletins

Known issues identified in the December 5, 2024 release

- Database Catalog fails to start after upgrading Data Lake to Azure Flexible Server

- After you upgrade your Data Lake from Azure Single Server to Azure Flexible Server, the Cloudera Data Warehouse Database Catalog fails to start.

- Database Catalog goes to an error state after a Data Lake resize

- After a Cloudera Data Lake resize, the Cloudera Data Warehouse Database Catalog must be restarted to pick up the change in Data Lake configuration. You can restart the Database Catalog using the CDP CLI or by using the Stop and Start functionality in the Cloudera Data Warehouse UI. However, regardless of how the Database Catalog is restarted, it ends up in an "Error" state.

- Unable to resize Workload Aware Autoscaling enabled Impala Virtual Warehouse using the UI

- If you use the Cloudera Data Warehouse UI to resize an Impala Virtual Warehouse that is enabled for Workload Aware Autoscaling, the Sizing and Scaling tab of the Virtual Warehouse Details page might display the message "Some operations are still running. Please wait...", and you cannot proceed even though the Virtual Warehouse is in a healthy state.

- Impala Virtual Warehouse fails to start due to missing helm release

- A failed helm release status prevents helm upgrade operations from executing successfully. This causes the Impala Virtual Warehouse to fail with the following error:

time="2024-07-04T13:32:46Z" level=info msg="helm release not found for input executor group: \"impala-executor-000\" in service impala-executor"

time="2024-07-04T13:32:46Z" level=error msg="addExecutorGroups failed with err: \"helm release not found for \\\"impala-executor-000\\\"\""
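When scanning collected logs for this failure, the info-level message quoted above can be matched with a regular expression. The following is a minimal sketch, assuming the log text is already available as a string. The function name missing_helm_releases is hypothetical and is not part of any Cloudera tooling.

```python
import re

# Matches the info-level message quoted in this known issue. The executor
# group name appears between escaped quotes (\"...\") inside the msg field.
MISSING_RELEASE = re.compile(
    r'helm release not found for input executor group: \\"(?P<group>[^"\\]+)\\"'
)


def missing_helm_releases(log_text: str) -> list[str]:
    """Return the executor group names reported as having no helm release."""
    return sorted({m.group("group") for m in MISSING_RELEASE.finditer(log_text)})


# Sample line copied from the error shown in this known issue.
sample = (
    'time="2024-07-04T13:32:46Z" level=info msg="helm release not found for '
    'input executor group: \\"impala-executor-000\\" in service impala-executor"'
)
print(missing_helm_releases(sample))  # ['impala-executor-000']
```

The sketch only identifies affected executor groups; it does not repair the helm release.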

Known issues identified before the December 5, 2024 release

- DWX-19451: Cloudera Data Visualization restore job can fail with ignorable errors

- After a successful Cloudera Data Visualization restoration job, the restore job could be in a failed state with the log displaying ignorable errors.

pg_restore: error: could not execute query: ERROR: sequence "jobs_joblog_id_seq" does not exist Command was: DROP SEQUENCE public.jobs_joblog_id_seq;

pg_restore: error: could not execute query: ERROR: table "jobs_joblog" does not exist Command was: DROP TABLE public.jobs_joblog;

pg_restore: error: could not execute query: ERROR: sequence "jobs_jobcontent_id_seq" does not exist Command was: DROP SEQUENCE public.jobs_jobcontent_id_seq;

....... .......

This issue occurs because the restore job issues commands to DROP all the objects that will be restored, and if any of these objects do not exist in the destination database, such ignorable errors are reported.

This has no functional impact on the restored Cloudera Data Visualization application. All the backed-up queries, datasets, connections, and dashboards are restored successfully, and Cloudera Data Visualization is available for new queries.
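To confirm that a failed restore job reported only the ignorable errors described above, the log can be filtered for entries that are not a DROP of a missing object. The following is a minimal sketch, assuming the restore log is available as text. The function name unexpected_restore_errors and the second sample entry are hypothetical and are used only for illustration.

```python
import re

# One entry runs from a "pg_restore: error:" marker up to the next marker
# (or to the end of the log).
ENTRY = re.compile(r"pg_restore: error:.*?(?=pg_restore: error:|\Z)", re.DOTALL)

# The ignorable pattern described above: a DROP issued for an object that
# does not exist in the destination database.
IGNORABLE = re.compile(
    r'ERROR:\s+\w+ "[^"]+" does not exist\s+Command was: DROP\s', re.DOTALL
)


def unexpected_restore_errors(log_text: str) -> list[str]:
    """Return pg_restore error entries that do not match the ignorable pattern."""
    entries = (entry.strip() for entry in ENTRY.findall(log_text))
    return [entry for entry in entries if not IGNORABLE.search(entry)]


# Entries taken from the log excerpt in this known issue.
ignorable_log = (
    'pg_restore: error: could not execute query: ERROR: sequence "jobs_joblog_id_seq" does not exist\n'
    "Command was: DROP SEQUENCE public.jobs_joblog_id_seq;\n"
    'pg_restore: error: could not execute query: ERROR: table "jobs_joblog" does not exist\n'
    "Command was: DROP TABLE public.jobs_joblog;\n"
)
# Hypothetical entry, invented for illustration: not a DROP of a missing object.
other_log = (
    "pg_restore: error: could not execute query: ERROR: permission denied for table jobs_joblog\n"
    "Command was: COPY public.jobs_joblog (id) FROM stdin;\n"
)

print(unexpected_restore_errors(ignorable_log))                   # []
print(len(unexpected_restore_errors(ignorable_log + other_log)))  # 1
```

An empty result suggests the failed status comes only from the ignorable DROP errors. Any remaining entry deserves a closer look.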

- CDPD-75422: Impala schema case sensitivity issue with workaround

- Impala's schema is case insensitive, causing errors with mixed case schema elements created through Spark during predicate pushdown.

- DWX-18843: Unable to read Iceberg table from Hive Virtual Warehouse

- If you have used Apache Flink to insert data into an Iceberg table that is created from Hive, you cannot read the Iceberg table from the Hive Virtual Warehouse.

- DWX-18489: Hive compaction of Iceberg tables results in a failure

- When Cloudera Data Warehouse and Cloudera Data Hub are deployed in the same environment and use the same Hive Metastore (HMS) instance, the Cloudera Data Hub compaction workers can inadvertently pick up Iceberg compaction tasks. Since Iceberg compaction is not yet supported in the latest Cloudera Data Hub version, the compaction tasks will fail when they are processed by the Cloudera Data Hub compaction workers.

In such a scenario where both Cloudera Data Warehouse and Cloudera Data Hub share the same HMS instance and there is a requirement to run both Hive ACID and Iceberg compaction jobs, it is recommended that you use the Cloudera Data Warehouse environment for these jobs. If you want to run only Hive ACID compaction tasks, you can choose to use either the Cloudera Data Warehouse or Cloudera Data Hub environments.

- DWX-18854: Compaction cleaner configuration

- The compaction cleaner is turned off by default in the Cloudera Data Warehouse database catalog, potentially causing compaction job failures.

- DWX-12703: Hue connects to only one Impala coordinator in Active-Active mode

- You may not see all Impala queries that have run on the Virtual Warehouse from the Impala tab on the Hue Job Browser. This occurs on an Impala Virtual Warehouse whose Impala coordinators are configured in active-active mode, because Hue fetches this information from only one active Impala coordinator.

- Known limitation: Cloudera Data Warehouse does not support S3 Express One Zone buckets

- Cloudera does not recommend deploying the Data Lake on S3 Express One Zone buckets. Cloudera Data Warehouse cannot read content present in the S3 Express One Zone buckets. The following limitations apply when using S3 Express buckets:

- You can only use S3 Express buckets with Cloudera Data Hubs running Runtime 7.2.18 or newer. Data services do not currently support S3 Express buckets.

- S3 Express buckets may not be used for logs and backups.

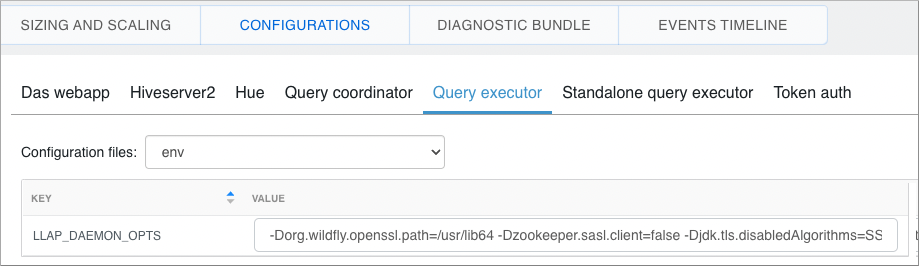

- DWX-15112: Enterprise Data Warehouse database configuration problems after a Helm-related rollback

- If a Helm rollback fails due to an incorrect Enterprise Data Warehouse database configuration, the Virtual Warehouse and Database Catalog roll back to a previous configuration. The incorrect Enterprise Data Warehouse configuration persists, and can affect subsequent edit, upgrade, and rebuild operations on the rolled-back Virtual Warehouse or Database Catalog.

- DWX-17455: ODBC client using JWT authentication cannot connect to Impala Virtual Warehouse

- If you are using the Cloudera ODBC Connector and JWT authentication to connect to a Cloudera Data Warehouse Impala Virtual Warehouse where the Impala coordinators are configured for high availability in an active-active mode, the connection results in a "401 Unauthorized" error. The error is not seen on Impala Virtual Warehouses that have an active-passive high availability or where high availability is disabled.

- HIVE-28055: Merging Iceberg branches requires a target table alias

- Hive supports only one level of qualifier when referencing columns. In other words, only one dot is accepted. For example, select table.col from ...; is allowed. select db.table.col is not allowed. Using the merge statement to merge Iceberg branches without a target or source table alias causes an exception:

org.apache.hadoop.hive.ql.parse.SemanticException: ... Invalid table alias or column reference ...

- Branch FAST FORWARD does not work as expected

- The Apache Iceberg spec indicates you can use either one or two arguments to fast forward a branch. The following example shows using two arguments:

ALTER TABLE <name> EXECUTE FAST-FORWARD 'x' 'y'

However, omitting the second branch name does not work as documented by Apache Iceberg. The named branch is not fast-forwarded to the current branch. An exception occurs at the Iceberg level.

- DWX-17613: Generic error message is displayed when you click on the directory you don't have access to on a RAZ cluster

- You see the following error message when you click on an ABFS directory to which you do not have read/write permission on the ABFS File Browser in Hue: There was a problem with your request. This message is generic and does not provide insight into the actual issue.

- DWX-17109: ABFS File Browser operations failing intermittently

- You may encounter intermittent issues while performing typical operations on files and directories on the ABFS File Browser, such as moving or renaming files.

- CDPD-27918: Hue does not automatically pick up RAZ HA configurations

- On a Cloudera on cloud environment in which you have configured RAZ in High Availability mode, Hue in Cloudera Data Warehouse does not pick up all the RAZ host URLs automatically. Therefore, if a RAZ instance to which Hue is connected goes down, Hue becomes unavailable.

- CDPD-66779: Partitioned Iceberg table not getting loaded with insert select query from Hive

- If you create a partitioned table in Iceberg and then try to insert data from another table as shown below, an error occurs.

insert into table partition_transform_4 select t, ts from vectortab10k;

- DWX-17703: Non-HA Impala Virtual Warehouse on a private Azure Kubernetes Service (AKS) setup fails

- When 'Refresh' and 'Stop' operations run in parallel, Impala might move into an error state. The Refresh operation might think that Impala is in an error state as the coordinator pod is missing.

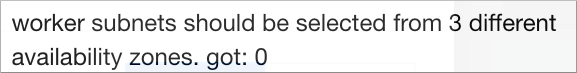

- DWX-15145: Environment validation popup error after activating an environment

- Activating an environment having a public load balancer can cause an environment validation popup error. To reproduce this problem: 1) Create a data lake. 2) Activate an environment having a public load balancer deployment type and subnets in three different availability zones. An environment validation popup can occur even though the subnets are in different availability zones. Several different popups can occur.

- DWX-15144: Virtual Warehouse naming restrictions

- You cannot create a Virtual Warehouse having the same name as another Virtual Warehouse even if the like-named Virtual Warehouses are in different environments. You can create a Database Catalog having the same name as another Database Catalog if the Database Catalogs are in different environments.

- DWX-13103: Cloudera Data Warehouse environment activation problem

- When Cloudera Data Warehouse environments are activated, a race condition can occur between the prometheus pod and istiod pod. The prometheus pod can be set up without an istio-proxy container, causing communication failures between prometheus and any other pods in the Kubernetes cluster. Data Warehouse prometheus-related functionalities, such as autoscaling, stop working. Grafana dashboards, which get metrics from prometheus, are not populated.

- AWS availability zone inventory issue

- In this release, you can select a preferred availability zone when you create a Virtual Warehouse; however, AWS might not be able to provide enough compute instances of the type that Cloudera Data Warehouse needs.

- DWX-7613: CloudFormation stack creation using AWS CLI broken for Cloudera Data Warehouse Reduced Permissions Mode

- If you use the AWS CLI to create a CloudFormation stack to activate an AWS environment for use in Reduced Permissions Mode, it fails and returns the following error:

An error occurred (ValidationError) when calling the CreateStack operation: Parameters: [SdxDDBTableName] must have values

The default value of SdxDDBTableName is not being set. If you create the CloudFormation stack using the AWS Console, there is no problem.

- Incorrect diagnostic bundle location

- The path you see to the diagnostic bundle is wrong when you

create a Virtual Warehouse, collect a diagnostic bundle of log files for

troubleshooting, and click

. Your storage account name is missing from the beginning of the

path.

. Your storage account name is missing from the beginning of the

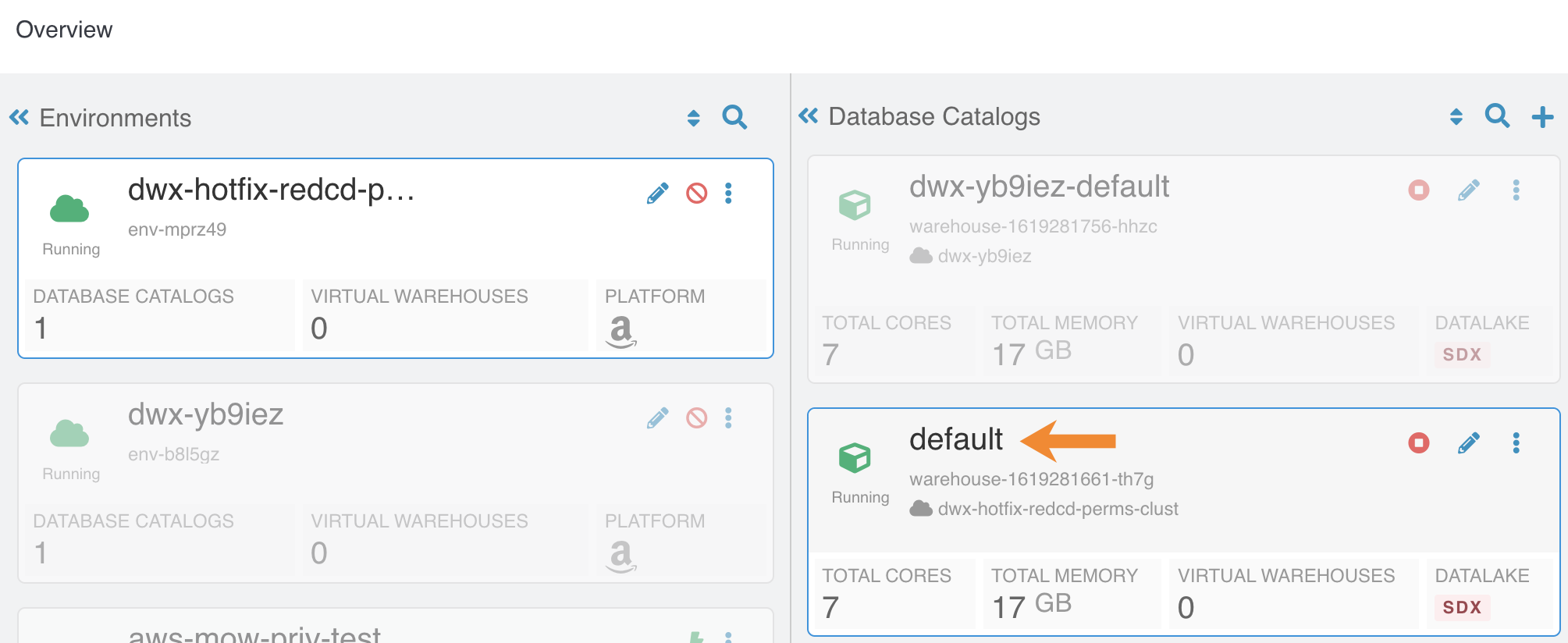

path. - DWX-7349: In reduced permissions mode, default Database Catalog name does not include the environment name

- When you activate an AWS environment in reduced permissions mode, the default Database Catalog name does not include the environment name.

This does not cause collisions because each Database Catalog named "default" is associated with a different environment. For more information about reduced permissions mode, see Reduced permissions mode for AWS environments.

- DWX-15064: Hive Virtual Warehouse stops but appears healthy

- Due to an istio-proxy problem, the query coordinator can unexpectedly enter a not ready state instead of the expected error-state. Subsequently, the Hive Virtual Warehouse stops when reaching the autosuspend timeout without indicating a problem.

- DWX-14452: Parquet table query might fail

- Querying a table stored in Parquet from Hive might fail with the following exception message: java.lang.RuntimeException: java.lang.StackOverflowError. This problem can occur when the IN predicate has 243 values or more, and a small stack (-Xss = 256k) is configured for Hive in Cloudera Data Warehouse.

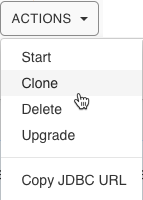

- DWX-5926: Cloning an existing Hive Virtual Warehouse fails

- If you have an existing Hive Virtual Warehouse that you clone by selecting Clone from the drop-down menu, the cloning process fails. This does not apply to creating a new Hive Virtual Warehouse.

- DWX-2690: Older versions of Beeline return SSLPeerUnverifiedException when submitting a query

- When submitting queries to Virtual Warehouses that use Hive, older Beeline clients return an SSLPeerUnverifiedException error:

javax.net.ssl.SSLPeerUnverifiedException: Host name ‘ec2-18-219-32-183.us-east-2.compute.amazonaws.com’ does not match the certificate subject provided by the peer (CN=*.env-c25dsw.dwx.cloudera.site) (state=08S01,code=0)

- DWX-16895: Incorrect status of Hue pods when you edit the Hue instance properties

- When you update a configuration of a Hue instance that is deployed at the environment level, such as increasing or decreasing the size of the Hue instance, you see a success message on the Cloudera Data Warehouse UI. After some time, the status of the Hue instance also changes from “Updating” to “Running”. However, when you list the Hue pods using kubectl, you see that not all backend pods are in the running state; a few of them are still in the init state.

- DWX-16893: A user can see all the queries in Job browser

- In a Hue instance deployed at the environment level, by design, the Hue instances must not share the saved queries and query history with other Hue instances even for the same user. However, a logged in user is able to view all the queries executed by that user on all the Virtual Warehouses on a particular Database Catalog.

- Delay in listing queries in Impala Queries in the Job browser

- Listing an Impala query in the Job browser can take an inordinate amount of time.

- DWX-14927: Hue fails to list Iceberg snapshots

- Hue does not recognize the Iceberg history queries from Hive to

list table snapshots. For example, Hue indicates an error at the . before history when

you run the following query.

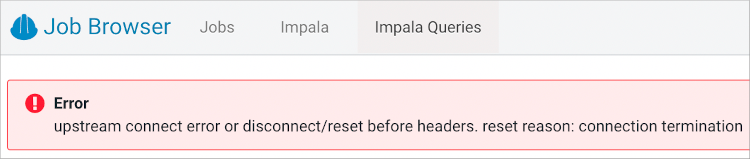

select * from <db_name>.<table_name>.history - DWX-14968: Connection termination error in Impala queries tab after Hue inactivity

- To reproduce the problem: 1) In the Impala job browser, navigate to Impala queries. 2) Wait for a few minutes. After a few minutes of inactivity, a connection termination error is displayed.

- DWX-15115: Error displayed after clicking on hyperlink below Hue table browser

- In Hue, below the table browser, clicking the hyperlink to a location causes an HTTP 500 error because the file browser is not enabled for environments that are not Ranger authorized (RAZ).

- DWX-15090: CSRF error intermittently seen in the Hue Job Browser

- You may intermittently see the “403 - CSRF” error on the Hue web interface as well as in the Hue logs after running Hive queries from Hue.

- DWX-8460: Unable to delete, move, or rename directories within the S3 bucket from Hue

- You may not be able to rename, move, or delete directories within your S3 bucket from the Hue web interface. This is because of an underlying issue, which will be fixed in a future release.

- DWX-6674: Hue connection fails on cloned Impala Virtual Warehouses after upgrading

- If you clone an Impala Virtual Warehouse from a recently upgraded Impala Virtual Warehouse, and then try to connect to Hue, the connection fails.

- DWX-5650: Hue only makes the first user a superuser for all Virtual Warehouses within a Data Catalog

- Hue marks the user that logs in to Hue from a Virtual Warehouse for the first time as the Hue superuser. But if multiple Virtual Warehouses are connected to a single Data Catalog, then the first user that logs in to any one of the Virtual Warehouses within that Data Catalog is the Hue superuser.

For example, consider that a Data Catalog DC-1 has two Virtual Warehouses VW-1 and VW-2. If a user named John logs in to Hue from VW-1 first, then he becomes the Hue superuser for all the Virtual Warehouses within DC-1. At this time, if Amy logs in to Hue from VW-2, Hue does not make her a superuser within VW-2.

- DWX-17210, DWX-13733: Timeout issue querying Iceberg tables from Hive

- When querying Iceberg tables from Hive, the queries can fail due to a timeout issue.

- DWX-14163: Limitations reading Iceberg tables in Avro file format from Impala

- The Avro, Impala, and Iceberg specifications describe some limitations related to Avro, and those limitations exist in Cloudera. In addition to these, the DECIMAL type is not supported in this release.

- DEX-7946: Data loss during migration of a Hive table to Iceberg

- In this release, by default the table property 'external.table.purge' is set to true, which deletes the table data and metadata if you drop the table during migration from Hive to Iceberg.

- DWX-13062, HIVE-26507: Converting a Hive table having CHAR or VARCHAR columns to Iceberg causes an exception

- CHAR and VARCHAR data can be shorter than the length specified by the data type. Remaining characters are padded with spaces. Data is converted to a string in Iceberg. This process can yield incorrect results when you query the converted Iceberg table.
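The effect of space padding on comparisons can be illustrated outside of Hive. The following is a minimal sketch that only mimics fixed-length padding with plain Python strings. The helper char_pad is hypothetical and does not reproduce Hive or Iceberg internals.

```python
def char_pad(value: str, length: int) -> str:
    """Mimic fixed-length storage: right-pad the value with spaces."""
    return value.ljust(length)


stored = char_pad("abc", 5)      # 'abc  ' (two trailing spaces)
print(stored == "abc")           # False: the padded string no longer equals the literal
print(stored.rstrip() == "abc")  # True: equal again once the padding is trimmed
```

Once padded data is treated as an ordinary string, an equality predicate against the unpadded value stops matching. This is one way a query on the converted table can return unexpected results.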

- Knowledge article

- For the latest update on this issue see the corresponding Knowledge article: TSB 2023-684: Automatic metadata synchronization across multiple Impala Virtual Warehouses (in Cloudera Data Warehouse on cloud) may encounter an exception

- IMPALA-11045: Impala Virtual Warehouses might produce an error when querying transactional (ACID) table even after you enabled the automatic metadata refresh (version DWX 1.1.2-b2008)

- Impala doesn't open a transaction for select queries, so you might get a FileNotFound error after compaction even though you refreshed the metadata automatically.

- Impala Virtual Warehouses might produce an error when querying transactional (ACID) tables (DWX 1.1.2-b1949 or earlier)

- If you are querying transactional (ACID) tables with an Impala Virtual Warehouse and compaction is run on the compacting Hive Virtual Warehouse, the query might fail. The compacting process deletes files and the Impala Virtual Warehouse might not be aware of the deletion. Then when the Impala Virtual Warehouse attempts to read the deleted file, an error can occur. This situation occurs randomly.

- Data caching:

- This feature is limited to 200 GB per executor, multiplied by the total number of executors.
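The limit above is simple arithmetic. The following is a minimal sketch, assuming the executor count of the Virtual Warehouse is known. The function name max_data_cache_gb is hypothetical.

```python
CACHE_LIMIT_PER_EXECUTOR_GB = 200  # per-executor limit stated in this document


def max_data_cache_gb(executor_count: int) -> int:
    """Upper bound on data cache capacity for a given number of executors."""
    if executor_count < 0:
        raise ValueError("executor_count must not be negative")
    return CACHE_LIMIT_PER_EXECUTOR_GB * executor_count


print(max_data_cache_gb(10))  # 2000
```

For example, a Virtual Warehouse running 10 executors can cache at most 2000 GB in total.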

- Sessions with Impala continue to run for 15 minutes after the connection is disconnected.

- When a connection to Impala is disconnected, the session continues to run for 15 minutes so that the user or client can reconnect to the same session by presenting the session_token. After 15 minutes, the client must re-authenticate to Impala to establish a new connection.
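A client can use the 15-minute window to decide whether to reuse its session_token or to re-authenticate. The following is a minimal sketch, assuming the client records when the disconnect happened. The function name can_resume_session is hypothetical, and treating exactly 15 minutes as expired is an assumption.

```python
from datetime import datetime, timedelta, timezone

SESSION_GRACE = timedelta(minutes=15)  # window stated in this document


def can_resume_session(disconnected_at: datetime, now: datetime) -> bool:
    """True while a disconnected session may still be resumed with its session_token."""
    return timedelta(0) <= now - disconnected_at < SESSION_GRACE


dropped = datetime(2024, 12, 5, 10, 0, tzinfo=timezone.utc)
print(can_resume_session(dropped, dropped + timedelta(minutes=14)))  # True
print(can_resume_session(dropped, dropped + timedelta(minutes=16)))  # False
```

When the function returns False, the client should authenticate again instead of presenting the old token.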

Technical Service Bulletins

- TSB 2023-684: Automatic metadata synchronization across multiple Impala Virtual Warehouses (in Cloudera Data Warehouse on cloud) may encounter an exception

- The Cloudera Data Warehouse on cloud 2023.0.14.0 (DWX-1.6.3) version incorporated a feature for performing automatic metadata synchronization across multiple Apache Impala (Impala) Virtual Warehouses. The feature is enabled by default and relies on the Hive MetaStore events. When a certain sequence of Data Definition Language (DDL) SQL commands is executed as described below, users may encounter a java.lang.NullPointerException (NPE). The exception causes the event processor to stop processing other metadata operations.

If a CREATE TABLE command (not CREATE TABLE AS SELECT) is followed immediately (approximately within 1 second interval) by INVALIDATE METADATA or REFRESH TABLE command on the same table (either on the same Virtual Warehouse or on a different one), there is a possibility that the second command will not find the table in the catalog cache of a peer Virtual Warehouse and generate an NPE.

- Knowledge article

- For the latest update on this issue see the corresponding Knowledge article: TSB 2023-684: Automatic metadata synchronization across multiple Impala Virtual Warehouses (in Cloudera Data Warehouse on cloud) may encounter an exception
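To check whether a workload issues the statement sequence described in this bulletin, a statement history can be scanned for a CREATE TABLE followed within about one second by INVALIDATE METADATA or REFRESH on the same table. The following is a minimal sketch, assuming the history is available as time-ordered (timestamp, SQL text) pairs. The function name risky_follow_ups and the simplified statement matching are assumptions for illustration. The sketch only detects the pattern and is not a fix.

```python
import re
from datetime import datetime, timedelta

RISK_WINDOW = timedelta(seconds=1)  # "approximately within 1 second" per the bulletin

# Simplified matching; real statements may need a proper SQL parser.
CREATE_TABLE = re.compile(
    r"^\s*CREATE\s+TABLE\s+(?:IF\s+NOT\s+EXISTS\s+)?([\w.]+)", re.IGNORECASE
)
CTAS = re.compile(r"\bAS\s+SELECT\b", re.IGNORECASE)
FOLLOW_UP = re.compile(
    r"^\s*(?:INVALIDATE\s+METADATA|REFRESH(?:\s+TABLE)?)\s+([\w.]+)", re.IGNORECASE
)


def risky_follow_ups(history):
    """Return (table, gap) pairs where a follow-up ran within RISK_WINDOW of CREATE TABLE.

    history: iterable of (datetime, sql_text) tuples sorted by time.
    """
    last_create = {}
    risky = []
    for ts, sql in history:
        created = CREATE_TABLE.match(sql)
        if created and not CTAS.search(sql):  # CREATE TABLE AS SELECT is excluded
            last_create[created.group(1).lower()] = ts
            continue
        follow_up = FOLLOW_UP.match(sql)
        if follow_up:
            table = follow_up.group(1).lower()
            created_at = last_create.get(table)
            if created_at is not None and ts - created_at <= RISK_WINDOW:
                risky.append((table, ts - created_at))
    return risky


t0 = datetime(2024, 1, 1, 12, 0, 0)
history = [
    (t0, "CREATE TABLE db1.t1 (id INT)"),
    (t0 + timedelta(milliseconds=400), "REFRESH db1.t1"),
    (t0 + timedelta(seconds=5), "CREATE TABLE db1.t2 AS SELECT * FROM db1.t1"),
    (t0 + timedelta(seconds=5, milliseconds=100), "INVALIDATE METADATA db1.t2"),
]
print(risky_follow_ups(history))
```

In this sample only db1.t1 is reported: the REFRESH ran 400 ms after the CREATE TABLE, while the second pair is skipped because it is a CREATE TABLE AS SELECT.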